AI Engineering's Full-Stack Problem

I can’t help but notice AI Engineering following a familiar pattern in tech - that creeping expectation for one role to handle everything. It really hit me while revisiting Chris Coyier’s (of css-tricks.com) 2019 keynote “ooooops I guess we’re* full-stack developers now”. He outlined how JavaScript developers went from frontend specialists to full-stack generalists, and I’m starting to see the same unsustainable pattern emerge in AI.

Honestly, for most of my career, I haven’t worried too much about this expanding-role problem. My thinking has been: if I can add value somewhere, why not? Sometimes that works out fine, especially when you’ve got good support - maybe a leader who shields you from the chaos, or a solid team to lean on.

But now we’re seeing this pattern explode in the AI world. As companies race to integrate AI capabilities, they’re creating positions that look more like wishlists for tech superheroes than realistic roles. It’s feeling a lot like the full-stack JavaScript evolution all over again, just with higher stakes.

Enter AI Engineering…

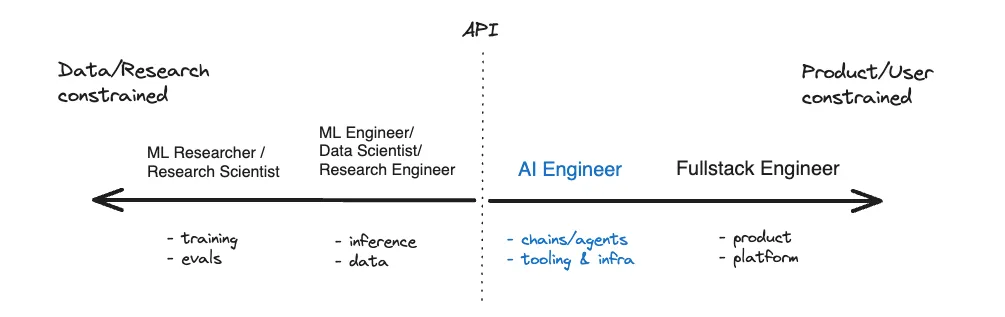

Back in 2023, Latent Space dropped an article called “The Rise of the AI Engineer” that really resonated with folks jumping into AI (funny enough, many coming from JavaScript backgrounds). It challenged how we typically think about AI development, suggesting we needed a different kind of engineer - someone focused more on getting stuff done than knowing all the theory.

> Most people still consider AI Engineering as a form of either Machine Learning or Data Engineering, so they recommend the same prerequisites. But I guarantee you that none of the highly effective AI Engineers I named above have done the equivalent work of the Andrew Ng Coursera courses, nor do they know PyTorch, nor do they know the difference between a Data Lake or Data Warehouse.

This is where it gets interesting: AI Engineering isn’t really about ML expertise anymore - it’s becoming a catch-all role where one person is expected to know a bit of everything, much like we saw with full-stack development.

So, what do AI Engineers do?

That’s the million-dollar question - one that even the original Latent Space article dances around. What exactly is this job? What does the day-to-day look like? What do companies actually expect?

Latent Space took another crack at this question when they got James Brady and Adam Wiggins on their podcast. They dug into what these roles really look like and why they’re so hard to pin down.

Here’s their take on it (not the whole picture, but pretty telling):

This sparked some interesting discussion on Twitter:

> I got to work with @james_elicit on writing down Elicit's hiring practices for AI skills!
>
> Some surprising takeaways:
>
> • Language models are a medium that is fundamentally different from other compute mediums
>
> • But AI engineers are not ML experts, nor is that their best…
>
> — Adam Wiggins (@_adamwiggins_) June 22, 2024

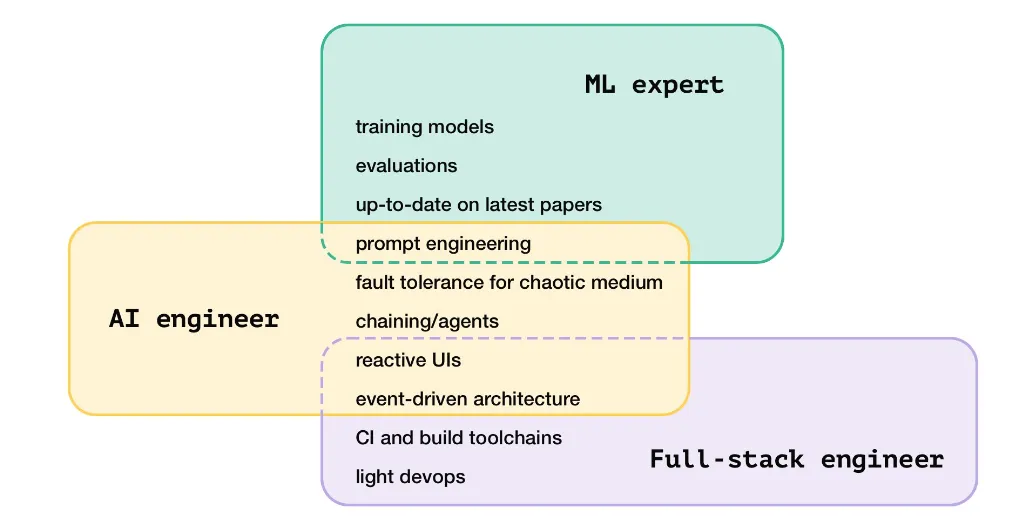

The full article and podcast lay out what companies are looking for, and it’s quite a list:

- Understanding and experience in backend development.

- Light devops and an understanding of infrastructure best practices.

- Queues, message buses, event-driven and serverless architectures, … there’s no single “correct” approach, but having a deep toolbox to draw from is very important.

- A genuine curiosity and enthusiasm for the capabilities of language models.

- An understanding of the challenges that come along with working with large models (high latency, variance, etc.) leading to a defensive, fault-first mindset.

They even test for browser API knowledge in interviews - because why not add that to the pile, right? 🙂
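To make the "defensive, fault-first mindset" from that list a bit more concrete, here is a minimal Python sketch of what it can look like in practice: treat every model call as something that may fail or return junk, validate the output, and retry with backoff. This is my own illustration, not anything from the Elicit article. `call_model` and `is_valid` are hypothetical stand-ins for whatever client and output check you actually use, and per-call timeouts are left to that client.

```python
import random
import time
from typing import Callable, Optional


class ModelCallError(Exception):
    """Raised when every attempt fails or returns unusable output."""


def call_with_retries(
    call_model: Callable[[str], str],   # hypothetical: sends a prompt, returns text
    prompt: str,
    is_valid: Callable[[str], bool],    # hypothetical: checks the output is usable
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call the model, retrying on errors and on invalid output."""
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        try:
            output = call_model(prompt)
            if is_valid(output):
                return output
            # Variance: the call "succeeded" but the answer is not usable.
            last_error = ValueError(f"invalid output on attempt {attempt + 1}")
        except Exception as exc:
            # Broad on purpose for the sketch; narrow this to your client's
            # timeout and rate-limit errors in real code.
            last_error = exc
        if attempt < max_attempts - 1:
            # Exponential backoff plus jitter before the next attempt.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, base_delay))
    raise ModelCallError(f"gave up after {max_attempts} attempts") from last_error
```

Something like `call_with_retries(my_client_call, "Summarize this paper", is_valid=lambda s: bool(s.strip()))` would be the typical shape of a call. The point is less the retry loop itself and more the assumption baked into it: failure and bad output are the normal case, not the exception.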

Are AI Engineers falling into the age-old trap?

Here’s where it gets tricky - everything I’ve talked about so far comes from AI-focused companies like Elicit and Latent Space. But as regular companies start adopting AI, they’re asking even more from these roles. When a company can only hire one or two AI people, guess who has to do everything from training models to building the frontend?

Picture this: you start your morning fine-tuning a language model, spend lunch wrestling with Kubernetes, and end your day building React components to show off your AI’s output. Sure, you’re doing a lot - but are you getting really good at any of it?

Here’s what companies need to think about:

- Setting clear boundaries for what AI Engineers should (and shouldn’t) handle

- Being realistic about what their AI projects actually need

- Investing in tools that make the technical stuff easier to manage

I’ve tried to keep this pretty objective and stick to what others are saying, but I can’t help sharing a bit of personal experience here 🙂. As someone in this field, I constantly struggle with the “go deep or go wide” question. It’s tempting to just fill in wherever there’s a gap - devops, infrastructure, maintenance, UI work - but that can become a black hole that pulls you away from what probably got you excited about AI in the first place: the actual AI stuff.

The big question we need to answer is how to balance knowing a little about everything with being really good at the important stuff. If we’re not careful, we’ll end up with another “full-stack” situation that just doesn’t work - and this time, the stakes are even higher.